Introduction

Microsoft’s Data-tier Application Packages (dacpacs) provide a nice mechanism for deploying your database schema and keeping your environments in sync. SSDT provides the ability to publish SQL Server projects via Visual Studio; however, in a professional setting you will want an automated and repeatable way to deploy your dacpacs. My tool of choice is Octopus Deploy. I will show you how to ingrate the two.

The SSDT Project

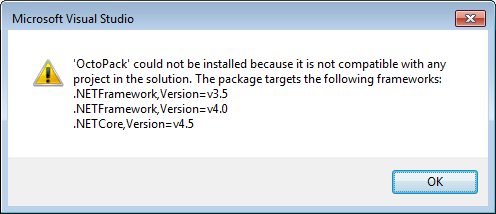

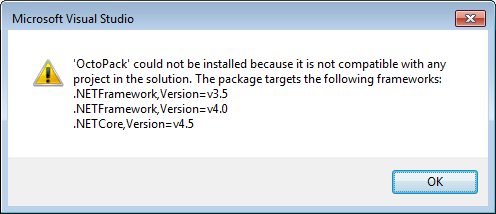

SSDT Sql Server projects present a bit of an issue when it comes to adding functionality to them: you can’t install NuGet packages.

This affects us in two way. The first part is that in my organization, we like to package our Octopus Deploy.ps1 and configuration transforms into a NuGet so that we can easily add them to our various projects, which we can’t do with database projects. The second issue is that you can’t install the OctoPack NuGet.

Deployment Script

To work around the first issue you must manually add a Deploy.ps1 file to the root of the project.

To script the deployment of a dacpac we are going to call out to SqlPackage.exe. Here is the template that I use:

$TargetConnectionString = $OctopusParameters["ConnectionString"]

$smartDefaults = $OctopusParameters["GenerateSmartDefaults"]

$dataLoss = $OctopusParameters["BlockOnPossibleDataLoss"]

if (!$smartDefaults) {

$smartDefaults = "False"

}

if (!$dataLoss) {

$dataLoss = "True"

}

if (!$TargetConnectionString) {

throw "Target connection parameter missing!"

}

Write-Host "Deploying database now..." -NoNewline

New-Alias -Name sqlpackage -Value "C:\Program Files (x86)\Microsoft SQL Server\110\DAC\bin\sqlpackage.exe"

sqlpackage /Action:Publish /SourceFile:"[[DACPAC_NAME]].dacpac" /tcs:$TargetConnectionString `

/p:GenerateSmartDefaults=$smartDefaults /p:BlockOnPossibleDataLoss=$dataLoss

Write-Host "deployed"

To populate the script, you have to replace [[DACPAC_NAME]] with the name of your dacpac file and ensure the path to sqlpackage.exe is correct for the server you are deploying to.

The first three lines retrieve variables from Octopus. The first is the connection string. The next two are some common parameters that you might find helpful. Please refer to the SSDT documentation to read more about the BlockOnPossibleDataLoss and GenerateSmartDefaults options. If they are not specified, the script will default to safe values (the SSDT defaults).

Passing Additional Variables

Sometimes you need additional variables in your dacpac project. For example, suppose that your database scripts reference another database. If that database has a different name in various environments, you will need to vary this per install (note: I don’t recommend ever doing this, but sometimes that is just how things are). Because the database name changes, you need some way to pass it in from Octopus.

For example purposes let’s assume that our database is called OtherDatabase_dev in our development enviornment and OtherDatabase_prod in our production environment. Our main database contains a script that needs to reference that database, for example:

CREATE PROCEDURE [dbo].[Procedure1]

AS

SELECT * FROM [OtherDatabse_dev].[dbo].[Customers]

RETURN 0

The first thing that we need to do change the database name to a variable. SQLCMD variables use the syntax $(Name).

CREATE PROCEDURE [dbo].[Procedure1]

AS

SELECT * FROM [$(OtherDatabse)].[dbo].[Customer]

RETURN 0

Now if you compile your dacpac, you will get a warning that Warning 1 SQL71562: Procedure: [dbo].[Procedure1] has an unresolved reference to object [$(OtherDatabase)].[dbo].[Customer]. You can either ignore this error, or add a reference to the database.

Add a Reference

There are two ways to provide a reference to your “OtherDatabase”. If it is also part, or can be part of your solution, you can add a reference to the other project in the solution:

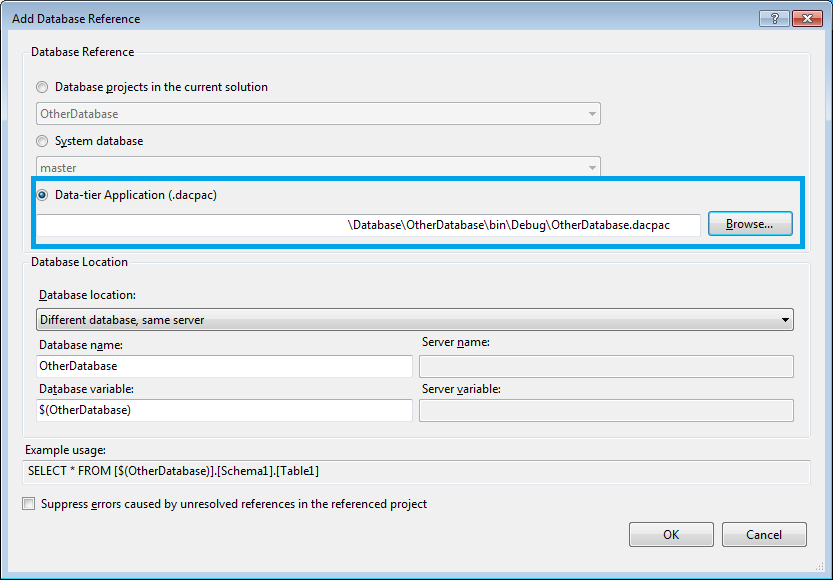

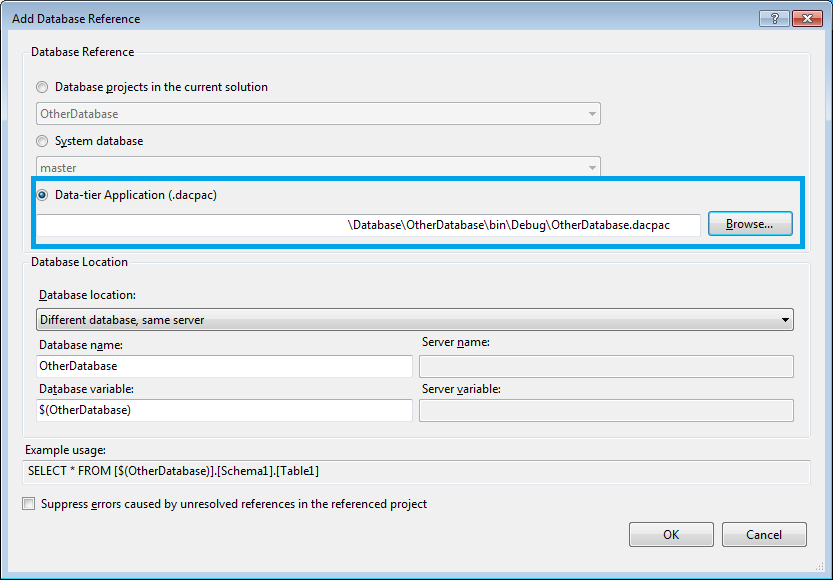

Once you click, the next dialog will show up:

Here you want to select Database projects in the current solution and point it to OtherDatabase. You also want to specify where the database will be located in your environment. Your options are:

- Same Database

- Different Database, same server

- Different Database, different server

Here I will assume we are using the second option. To be honest, I don’t know what affect the Database name field has. It will default to the name of your project. For the Database variable field enter the variable name you wish to use.

Now if you compile solution, the warning will disappear.

The alternate way to add a database reference is to refer directly to another dacpac. I have used this when the two database projects are in different solutions. You need to do the following:

- Build the other database to produce a dacpac

- Copy the dacpac into your source tree (and add to source control).

- Reference the dacpac.

To reference the dacpac, follow the same steps as before except this time you want to select Data-tier Application (.dacpac) from the list of options. Browse to the dacpac file. The other options remain the same.

Again, compiling the solution will see the warning eliminated.

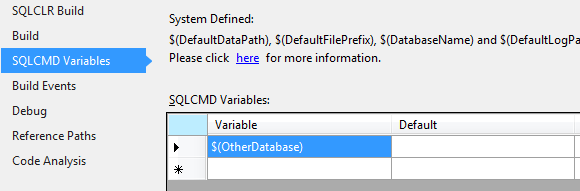

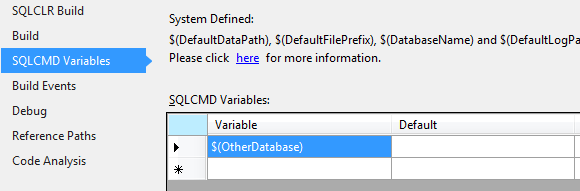

Adding the Variable

Now we can actually add the variable that will get populated from Octopus. Right-click the project and select Properties. From the properties dialog, click on the SQLCMD Variables tab. In the table of variables, add your variable name:

You can also add a default value. I recommend putting your development value in there.

Lastly, we need to augment the deployment script to retreive the value from Octopus and pass it along to the dacpac. To the top of the retrieve the value with something similar to:

$otherDatabase = $OctopusParameters["OtherDatabase.Name"]

At the end of the sqlpackage invocation pass in the variable:

/v:OtherDatabase="$otherDatabase"

The above is SQLCMD variable syntax. You can pass as many of these to SqlPacakge as you like. The syntax is /v:Name=Value

Packaging for Octopus

Since we can’t add OctoPack to the project, we have to get creative here. How to implement the package is heavily influenced by how you will be automating your build. You might be using TFS, TeamCity, psake or something else. Essentially, you just need to get the dacpac file and the Deploy.ps1 file into a NuGet.

For example, if you were using psake, you might put octo.exe into your projects folder and call it directly:

.\octo.exe pack –id=Database –basePath=bin\Debug –version=1.0.0.0

In our environment, we use TFS Build. We run “Custom After Microsoft Common Targets” extensions as I have described previously.

With our common targets, we override the Dacpac’s version, copy the dacpac and dlls to a staging folder and then call the CreateOctoPackPackage task with our version and the location of the staging folder.

If you are interested in additional details of how we implement the custom build, please leave a comment.

Deploying with Octopus

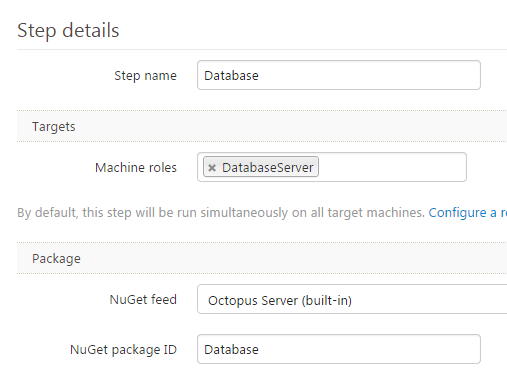

Finally, we are ready to configure Octopus Deploy to deploy the database. If you don’t have a Project in Octopus, create one now.

Open your project and click on the Process tab:

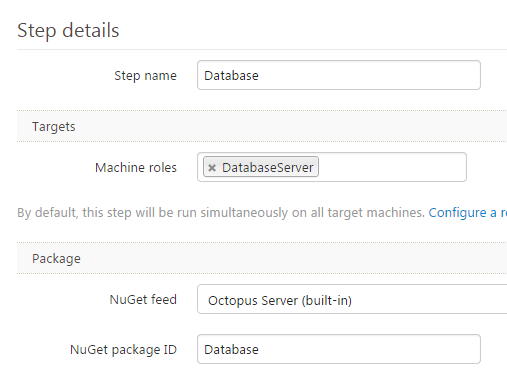

Click Add step and select Deploy a NuGet package from the list.

In the Step details add the Step name, Machine roles and NuGet package ID. In my case, the NuGet package ID is always the .NET project name and I label the step with the same value. The machine role is also typically DatabaseServer but will, of course, be specific to your environment.

Now click on the variables tab. There are to variables you must enter:

- ConnectionString

- OtherDatabase.Name

You can also optionally add BlockOnPossibleDataLoss and GenerateSmartDefaults.

Conclusion

Dacpacs provide a pretty slick way to deploy a database schema and keep your environments in sync (although they are not without their issues, which I will write about at a later date). Deploying them with Octopus Deploy is straightforward and only requires a basic PowerShell script. Many advanced features are available SQLCMD variables and SqlPackage parameters, all of which can be controlled from the Octopus interface.