Disclaimer

The process that follows is what I used to successfully deploy a DNN site with Octopus Deploy. I am not entirely convinced that it is the best way to accomplish the goal, but it does work. At the end, I will lay out a potential alternative method, although it is not something I have implemented.

Introduction

The first step in the process was to convert the DNN website to a .NET web application. This is obviously the most controversial point in the overall methodology. Converting is non-trivial and introduces issues when upgrading to newer versions of DNN. However, we were faced with a dilemma: work DNN into our existing build and deployment process, or create a new process just for DNN. We chose the former.

Our main concern was generating the NuGet package for Octopus in an automated fashion. Since we already had tooling to do this with TFS Build, it made sense to at least try to fit DNN into that tooling.

Converting DNN to a Web Application

Conversion

The general process of converting a website to a web application is fairly straightforward and is documented on MSDN: Converting a Web Site Project to a Web Application Project in Visual Studio.

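However, DNN is a very large project, and after the conversion was complete there were still several build errors caused by name conflicts. DNN has multiple pages and controls with the same name scattered throughout the folder hierarchy, and some of their code-behind classes share the same fully qualified name. A website project compiles pages separately, so the duplicates never end up in the same assembly. A web application compiles all code-behind into a single assembly, where they collide. I resolved these conflicts by changing the namespaces of the code-behind classes.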

For example, there are several Login.ascx controls:

- DesktopModules\AuthenticationServices\DNN\Login.ascx

- DesktopModules\Admin\Authentication\Login.ascx

Both are in the DotNetNuke.Modules.Admin.Authentication namespace. I simply changed the namespace of the one in AuthenticationServices to DotNetNuke.Modules.AuthenticationServices. I then changed the Inherits attribute in its Login.ascx from Inherits="DotNetNuke.Modules.Admin.Authentication.Login" to Inherits="DotNetNuke.Modules.AuthenticationServices.Login". I also had to add or change using statements throughout the application to import the new namespaces. Overall, the number of these changes was manageable, and it took me an hour or two to get the entire website compiling.
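Finding these conflicts one build error at a time is tedious. A quick scan for markup files that share the same Inherits value can flag them up front. The following is a minimal Python sketch of that idea. It is not part of the process described here. It assumes each .ascx/.aspx/.master file names its code-behind class in an Inherits="..." attribute, and the script and function names are hypothetical.

```python
import re
import sys
from collections import defaultdict
from pathlib import Path

# Matches the Inherits="..." attribute of an <%@ Control/Page/Master %> directive.
INHERITS_RE = re.compile(r'Inherits\s*=\s*"([^"]+)"', re.IGNORECASE)
MARKUP_SUFFIXES = {".ascx", ".aspx", ".master"}


def duplicate_inherits(markup_by_path):
    """Given {relative_path: file_text}, return {class_name: [paths]} for each
    code-behind class that more than one markup file inherits from."""
    users = defaultdict(list)
    for path, text in sorted(markup_by_path.items()):
        match = INHERITS_RE.search(text)
        if match:
            users[match.group(1)].append(path)
    return {cls: paths for cls, paths in users.items() if len(paths) > 1}


def read_markup(site_root):
    """Read every .ascx/.aspx/.master file under site_root (hypothetical helper)."""
    root = Path(site_root)
    return {
        p.relative_to(root).as_posix(): p.read_text(encoding="utf-8", errors="ignore")
        for p in root.rglob("*")
        if p.is_file() and p.suffix.lower() in MARKUP_SUFFIXES
    }


if __name__ == "__main__" and len(sys.argv) == 2:
    # Usage: python find_conflicts.py C:\path\to\Website
    for cls, paths in duplicate_inherits(read_markup(sys.argv[1])).items():
        print(cls)
        for path in paths:
            print("    " + path)
```

Each class name the script reports is one that needs a new namespace in all but one of the listed locations. The scan only finds conflicts that show up in markup. Duplicate classes with no .ascx/.aspx would still surface as build errors.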

Libraries

Next, I took all the DLLs that the project references directly out of the website's bin folder and added them to source control in a Lib folder, adjacent to my Src folder in TFS. The "Copy Local" setting on each reference copies these files to the bin folder when the application is built. However, the DNN site also requires several binaries that it does not reference directly. The project builds without them, but the site will not run correctly unless they are present in the bin folder. I am not familiar enough with DNN to say exactly what they are, so I assume they are plugins of some kind.

For these, I created a Binaries folder within the Src folder, ending up with a layout like this:

```text
Src/
    Binaries/
        Providers/
            DotNetNuke.ASP2MenuNavigationProvider.dll
            DotNetNuke.DNNDropDownNavigationProvider.dll
            ...
        CNVelocity.dll
        DNNGo.Modules.DNNGallery.dll
        ...
Lib/
    DotNetNuke.Web.dll
    ...
```
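Working out which DLLs belong in Binaries is mostly trial and error. One way to get a starting list is to compare the bin folder of the original, working website with the references in the converted project. Here is a rough Python sketch of that comparison. It assumes a classic (non-SDK-style) csproj that uses the MSBuild 2003 XML namespace. The script and function names are hypothetical, and the output is a list of candidates rather than a definitive answer.

```python
import sys
import xml.etree.ElementTree as ET
from pathlib import Path, PureWindowsPath

# Classic (non-SDK) .csproj files put every element in this namespace.
MSBUILD_NS = {"msb": "http://schemas.microsoft.com/developer/msbuild/2003"}


def referenced_assemblies(csproj_text):
    """Lower-cased simple names of everything the project references, plus its
    own <AssemblyName>. ProjectReferences are approximated by the referenced
    project's file name."""
    root = ET.fromstring(csproj_text)
    names = set()
    for ref in root.iterfind(".//msb:Reference", MSBUILD_NS):
        # Include may be a full name such as "DotNetNuke, Version=7.0.0.0, ...".
        names.add(ref.get("Include").split(",")[0].strip().lower())
    for proj in root.iterfind(".//msb:ProjectReference", MSBUILD_NS):
        names.add(PureWindowsPath(proj.get("Include")).stem.lower())
    for asm in root.iterfind(".//msb:AssemblyName", MSBUILD_NS):
        names.add(asm.text.strip().lower())
    return names


def unreferenced_dlls(csproj_text, bin_file_names):
    """DLLs in a working bin folder that the project never references."""
    refs = referenced_assemblies(csproj_text)
    return sorted(
        name for name in bin_file_names
        if name.lower().endswith(".dll") and Path(name).stem.lower() not in refs
    )


if __name__ == "__main__" and len(sys.argv) == 3:
    # Usage: python find_unreferenced.py Website.csproj C:\path\to\working\bin
    csproj, bin_dir = Path(sys.argv[1]), Path(sys.argv[2])
    files = [p.name for p in bin_dir.iterdir() if p.is_file()]
    for name in unreferenced_dlls(csproj.read_text(encoding="utf-8-sig"), files):
        print(name)
```

Anything the script reports from bin is a candidate for Binaries. The contents of bin\Providers would go to Binaries\Providers.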

In the project file I added a new target to copy the binaries to the bin folder when the project is built. I put the following code at the bottom of the csproj file:

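```xml
<Target Name="CopyBinaries" AfterTargets="CopyFilesToOutputDirectory">
  <ItemGroup>
    <!-- Everything under Binaries\Providers goes to the Providers subfolder of the output. -->
    <Providers Include="$(MSBuildProjectDirectory)\Binaries\Providers\*.*" />
    <!-- DLLs directly under Binaries go to the output folder itself. -->
    <Binaries Include="$(MSBuildProjectDirectory)\Binaries\*.dll" />
  </ItemGroup>
  <!-- ContinueOnError turns copy failures into warnings instead of failing the build. -->
  <Copy SourceFiles="@(Binaries)" DestinationFolder="$(OutDir)" ContinueOnError="true" />
  <Copy SourceFiles="@(Providers)" DestinationFolder="$(OutDir)\Providers" ContinueOnError="true" />
</Target>
```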

This is nice because it works from both Visual Studio and TFS Build (with its default Binaries output directory).

At this point, the project builds in both environments, and the output can be copied as-is to an IIS web application folder. The next step is getting it packaged for Octopus.

Packaging for Octopus

Packaging for Octopus is very straightforward: the package is really just a nupkg with everything at the root. (I have created such packages by simply calling NuGet.exe from the command line with PowerShell.)
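A nupkg is an ordinary ZIP archive, so it is easy to check that a package really has the site at its root before handing it to Octopus. The sketch below is a hypothetical Python helper, not part of NuGet or Octopus. It skips the bookkeeping parts NuGet.exe adds ([Content_Types].xml, _rels/, package/) and the .nuspec, and reports what remains at the top level.

```python
import sys
import zipfile

# Parts NuGet.exe adds to every package for its own bookkeeping.
NUGET_METADATA = ("[Content_Types].xml", "_rels/", "package/")


def package_root_entries(nupkg):
    """Sorted top-level files and folders (folders end in "/") of a .nupkg,
    ignoring NuGet's bookkeeping parts and the root .nuspec.
    `nupkg` may be a path or a binary file object."""
    with zipfile.ZipFile(nupkg) as package:
        names = package.namelist()
    entries = set()
    for name in names:
        if name.startswith(NUGET_METADATA) or ("/" not in name and name.endswith(".nuspec")):
            continue
        head, sep, _ = name.partition("/")
        entries.add(head + sep)  # "bin/DotNetNuke.dll" -> "bin/"
    return sorted(entries)


def looks_like_library_package(entries):
    """True if the package uses the lib/ or content/ layout of a library
    package instead of having the site's files at the root."""
    return "lib/" in entries or "content/" in entries


if __name__ == "__main__" and len(sys.argv) == 2:
    found = package_root_entries(sys.argv[1])
    print("\n".join(found))
    if looks_like_library_package(found):
        print("warning: lib/ or content/ found at the package root")
```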

OctoPack

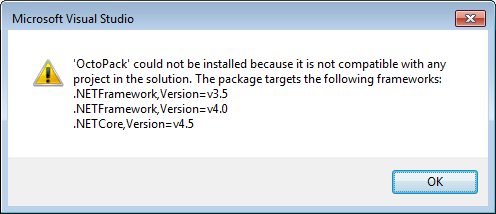

The Octopus Deploy team distributes a tool called OctoPack for packaging your build as a nupkg. I highly recommend at least attempting to implement the build using OctoPack before continuing down our custom route.

Extending OctoPack

As I said earlier, we have an existing process for packaging .NET projects as part of our TFS Build system. The nice thing is that it also works without TFS Build.

It boils down to a little-documented MSBuild extension point. Microsoft.Common.targets imports whatever file the CustomAfterMicrosoftCommonTargets property points to. You can therefore call MSBuild and pass that property the path of another MSBuild file that extends the build:

```bat
msbuild.exe solution.sln /property:CustomAfterMicrosoftCommonTargets=custom.proj
```

This is a really elegant way to extend multiple projects or solutions without having to modify them individually.

In this case, the custom.proj file contains the code necessary to build the Octopus Deploy package. (I am going to gloss over some of the details as I did not author this part of the process.)

First you need to reference the OctoPack MSBuild tasks:

```xml
<UsingTask TaskName="OctoPack.Tasks.CreateOctoPackPackage" AssemblyFile="OctoPack\targets\OctoPack.Tasks.dll" />
```

Then in a custom target, call CreateOctoPackPackage:

```xml
<CreateOctoPackPackage
    NuSpecFileName="$(OctoPackNuSpecFileName)"
    ContentFiles="@(Content)"
    OutDir="$(OctoPackBinFiles)"
    ProjectDirectory="$(MSBuildProjectDirectory)"
    ProjectName="$(MSBuildProjectName)"
    PackageVersion="$(OctoPackPackageVersion)"
    PrimaryOutputAssembly="$(TargetPath)"
    ReleaseNotesFile="$(OctoPackReleaseNotesFile)"
    NuGetExePath="$(OctoPackNuGetExePath)">
  <Output TaskParameter="Packages" ItemName="OctoPackBuiltPackages" />
  <Output TaskParameter="NuGetExePath" PropertyName="OctoPackNuGetExePath" />
</CreateOctoPackPackage>
```

Then copy the built package to the file share that serves as the Octopus feed:

```xml
<Copy SourceFiles="@(OctoPackBuiltPackages)" DestinationFolder="$(OctoPackPublishPackageToFileShare)" Condition="'$(OctoPackPublishPackageToFileShare)' != ''" />
```

The code above was taken from the default OctoPack targets and modified to fit our needs. We changed it to use our versioning scheme, and we vary OutDir by project type (SSDT database project, console application, or website/service). That variation is handled elsewhere in our custom.proj file.

Finally, you just need to call MSBuild with additional command-line parameters:

```bat
/p:RunOctoPack=true /p:OctoPackPublishPackageToFileShare="[Octopus Feed]" /p:CustomAfterMicrosoftCommonTargets="[Custom.proj]"
```

You can add these to your TFS build definition by entering them in the MSBuild Arguments field under Advanced.
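If you drive the build from a script instead of, or as well as, a TFS build definition, the same switches can be assembled programmatically. This is a hedged Python sketch, and the function names and example paths are placeholders. It insists on an absolute path for the custom .proj because the import happens from inside Microsoft.Common.targets, and a full path avoids any doubt about which directory a relative path is resolved against. It also shows the Windows-style quoting needed when a path contains spaces.

```python
import ntpath
import subprocess
import sys


def octopack_msbuild_arguments(solution, custom_proj, feed):
    """MSBuild arguments that run OctoPack through a custom .proj hooked in via
    CustomAfterMicrosoftCommonTargets and publish the package to `feed`."""
    if not ntpath.isabs(custom_proj):
        # The import is performed by Microsoft.Common.targets, so require a
        # full Windows path rather than relying on relative-path resolution.
        raise ValueError("custom_proj should be an absolute Windows path")
    return [
        solution,
        "/p:RunOctoPack=true",
        f"/p:OctoPackPublishPackageToFileShare={feed}",
        f"/p:CustomAfterMicrosoftCommonTargets={custom_proj}",
    ]


def tfs_msbuild_arguments_field(arguments):
    """The switches (without the solution) as one Windows-quoted string,
    suitable for the MSBuild Arguments field of a TFS build definition."""
    return subprocess.list2cmdline(arguments[1:])


if __name__ == "__main__" and len(sys.argv) == 4:
    # Usage: python build.py DNN.sln C:\Build\custom.proj \\server\OctopusFeed
    # Assumes msbuild.exe is on the PATH.
    subprocess.run(["msbuild.exe", *octopack_msbuild_arguments(*sys.argv[1:])], check=True)
```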

Modules

Most likely you will also have custom DNN modules that need to be deployed as part of the site. Since we had already converted DNN to a web application, the obvious choice was to package the modules as NuGet packages and reference them from the DNN project.

The details of packaging a .NET project as a NuGet package are outside the scope of this article and are well documented elsewhere. The key is that you build the modules as NuGet packages and publish them to your internal NuGet repository.

There are two ways to handle the content files for modules:

- Include them in the DNN project

- Include them in the module NuGet package

There are drawbacks to both approaches.

In the first scenario, you physically nest the module projects within the DesktopModules folder of the DNN project. You then include the files in the DNN project and change their Build Action property to Content. You must also change their Build Action property to None in the module project, so that they do not get packaged in the NuGet.

To summarize, in the first scenario, the module content is part of the DNN project and not the NuGet. The NuGet is used solely to add the DLL file to the DNN project.

The drawback here is that the module NuGet packages are not truly self-contained, and the setup is complicated to get right, especially if you have many developers.

Despite the downsides, we took this approach. The benefit is that developers can compile the DLLs directly into the DNN site’s bin folder and run the site locally without first packaging the NuGets.
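The first approach depends on every content file having the right Build Action in two different projects. A small consistency check can catch mistakes before they reach a build. This Python sketch is not from our actual setup. It assumes classic csproj files, module content stored under a folder such as DesktopModules\Custom\Module in the site project, and a hypothetical list of content extensions.

```python
import xml.etree.ElementTree as ET

MSBUILD_NS = {"msb": "http://schemas.microsoft.com/developer/msbuild/2003"}
# Hypothetical list of extensions treated as module content; adjust as needed.
CONTENT_EXTENSIONS = (".ascx", ".aspx", ".css", ".js", ".resx", ".html")


def items(csproj_text, build_action):
    """Include paths of every item whose element name (its Build Action) is
    build_action, e.g. "Content", "None" or "Compile"."""
    root = ET.fromstring(csproj_text)
    return [i.get("Include") for i in root.iterfind(f".//msb:{build_action}", MSBUILD_NS)]


def module_files_packaged_by_mistake(module_csproj_text):
    """Content-type files still marked Content in the module project, which
    would therefore end up inside the module's NuGet package."""
    return sorted(p for p in items(module_csproj_text, "Content")
                  if p.lower().endswith(CONTENT_EXTENSIONS))


def module_files_missing_from_site(site_csproj_text, module_csproj_text, module_folder):
    """Module content files (Build Action None in the module project) that the
    DNN site project does not include as Content under module_folder."""
    site_content = {p.lower() for p in items(site_csproj_text, "Content")}
    missing = []
    for path in items(module_csproj_text, "None"):
        if not path.lower().endswith(CONTENT_EXTENSIONS):
            continue
        site_path = module_folder.rstrip("\\") + "\\" + path
        if site_path.lower() not in site_content:
            missing.append(site_path)
    return sorted(missing)
```

Both checks return empty lists when the two projects agree, which makes them easy to run as a gate in a build or a pre-commit step.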

In the second scenario, you include the module content in the NuGet package. Because of this, you have to host your module projects outside of the DNN project's folder structure. Otherwise, the NuGet packages would deploy the content to the same location where it already lives. However, once the content is in the NuGet package, by default it is packaged with the same layout it has in the module project, which means it will not be deployed to the correct location in the site. For example, if you have the following project layout:

```text
Module/
    Module.proj
    View.ascx
    bin/
        Module.dll
```

You will get a NuGet package with this layout:

```text
lib/
    net40/
        Module.dll
content/
    View.ascx
```

This will deposit the View.ascx in the root of your DNN site!

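To override the default behaviour, you need a custom nuspec file that tells NuGet where the files should go. The target has to start with content\ for NuGet to treat the file as content when the package is installed into the DNN project. For example:

```xml
<files>
  <file src="View.ascx" target="content\DesktopModules\Custom\Module\View.ascx" />
</files>
```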

And herein lies the major drawback of this approach: you have to maintain custom nuspec files. Once you add the <files> section, you have to specify all the files you need to include. This becomes a pain to maintain. Furthermore, you have to build and update your modules to see changes, even on your development machine.
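One way to take some of the pain out of maintaining the <files> section is to generate it. The Python sketch below walks a module folder and emits one <file> element per content file. The extension list and the module path are assumptions. Each target is prefixed with content\ so that NuGet treats the file as project content when the package is installed into the DNN project.

```python
import ntpath
import xml.etree.ElementTree as ET
from pathlib import Path

# Hypothetical: extensions treated as module content; adjust to your modules.
CONTENT_EXTENSIONS = (".ascx", ".css", ".js", ".resx", ".html", ".png", ".gif")


def module_files(module_dir):
    """Relative paths of all files under module_dir, skipping bin/ and obj/."""
    root = Path(module_dir)
    return [
        p.relative_to(root).as_posix()
        for p in root.rglob("*")
        if p.is_file() and not {"bin", "obj"} & set(p.relative_to(root).parts)
    ]


def nuspec_files_element(relative_paths, module_target):
    """A nuspec <files> element that maps each content file to
    content\\<module_target>\\<relative path>, so NuGet installs it under the
    module's folder in the DNN project instead of the site root."""
    files = ET.Element("files")
    for rel in sorted(relative_paths):
        if not rel.lower().endswith(CONTENT_EXTENSIONS):
            continue
        rel = rel.replace("/", "\\")
        ET.SubElement(files, "file", src=rel,
                      target=ntpath.join("content", module_target, rel))
    return files
```

Passing the result through ET.indent (Python 3.9+) and ET.tostring(element, encoding="unicode") gives text that can be pasted into the nuspec. The nuspec src attribute also accepts wildcards (for example **\*.ascx), which may keep a hand-maintained list short enough to avoid generating it at all.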

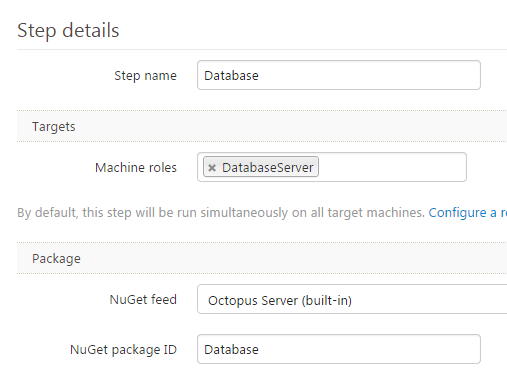

DNN Database

Schema

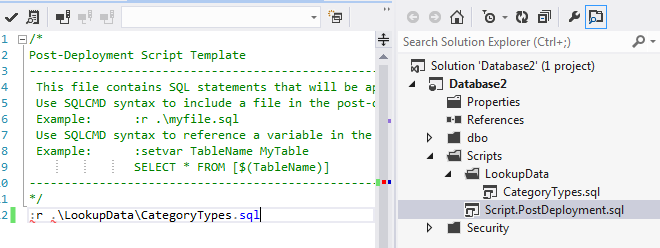

DNN also has a database to deploy, and I took the extra step of including it in our deployment automation. I would say that this step is optional in the grand scheme of things, but I will describe it nonetheless.

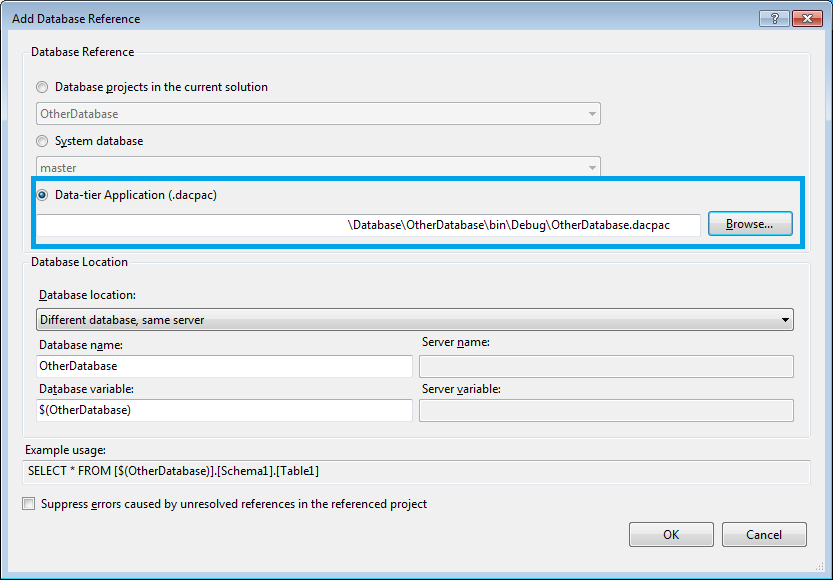

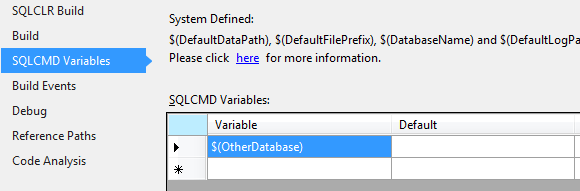

I used the excellent SQL Server Data Tools (SSDT) to reverse engineer the database schema into a database project, which I included in the DNN website solution. TechNet has a nice step-by-step tutorial for reverse engineering a database with SSDT.

Deploying a dacpac is a topic of its own, but in summary: you package the dacpac in a nupkg together with a Deploy.ps1 script that calls SqlPackage.exe. Octopus runs a Deploy.ps1 found in the package automatically after extracting it. It is not particularly complicated.
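The heart of such a Deploy.ps1 is a single SqlPackage.exe call. Sketched in Python rather than PowerShell, it looks roughly like this. The server and database names would normally come from Octopus variables, and the function name is hypothetical. /Action:Publish, /SourceFile, /TargetServerName, /TargetDatabaseName and the BlockOnPossibleDataLoss publish property are standard SqlPackage.exe options.

```python
import subprocess
import sys


def sqlpackage_publish_arguments(dacpac, server, database, block_on_data_loss=True):
    """SqlPackage.exe arguments that upgrade `database` on `server` to match
    the schema in `dacpac`."""
    return [
        "/Action:Publish",
        f"/SourceFile:{dacpac}",
        f"/TargetServerName:{server}",
        f"/TargetDatabaseName:{database}",
        # Stop the publish if the schema change could cause data loss.
        f"/p:BlockOnPossibleDataLoss={block_on_data_loss}",
    ]


if __name__ == "__main__" and len(sys.argv) == 4:
    # Usage: python deploy_dacpac.py DNN.dacpac sql01 DNN_Prod
    # Assumes SqlPackage.exe is on the PATH.
    subprocess.run(["SqlPackage.exe", *sqlpackage_publish_arguments(*sys.argv[1:])], check=True)
```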

Data

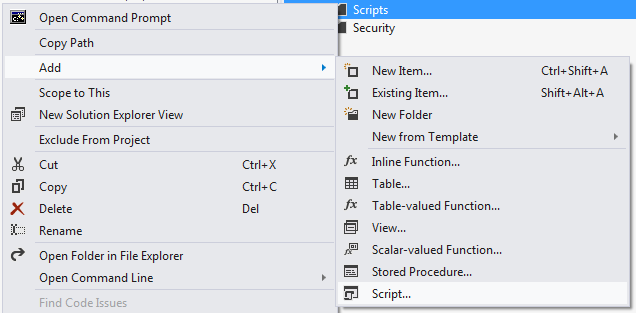

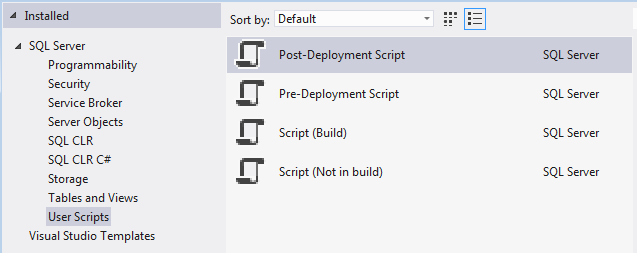

Once you have the schema deploying, the immediate next question is how to deploy data changes. Installing modules, adding page sections, and adding modules to pages, for example, all change the site configuration data stored in the database.

The strategy here is to have a master reference database that reflects the configuration of your production database. When a new development cycle starts, you must make a clone of this database. All configuration, throughout the cycle, is done to the clone database. When the development cycle is “complete” (are we ever really done?) and you are ready to create a release, you compare the two databases and generate a migration script. In my case, I was able to use the SSDT Data Compare tool to successfully generate these scripts.
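To make the compare step concrete: a data-compare tool matches rows by primary key. It emits an INSERT for each row found only in the clone, an UPDATE for each changed row, and a DELETE for each row found only in the reference. The toy Python sketch below does that for a single table. It uses in-memory SQLite databases as stand-ins for the reference and clone databases, with a simplified, hypothetical Tabs table. It is only an illustration, not a substitute for SSDT Data Compare. Real DNN tables also have foreign keys and identity columns that affect statement order and would need SET IDENTITY_INSERT on SQL Server.

```python
import sqlite3


def sql_literal(value):
    """Render a value as a SQL literal (numbers, text and NULL only)."""
    if value is None:
        return "NULL"
    if isinstance(value, (int, float)):
        return str(value)
    return "'" + str(value).replace("'", "''") + "'"


def data_diff_script(reference, clone, table, key, columns):
    """Statements that turn `table` in the reference database into the clone's
    version, matching rows on the primary-key column `key`. Table and column
    names are interpolated directly, so they must come from trusted input."""
    cols = ", ".join([key, *columns])
    query = f"SELECT {cols} FROM {table}"
    old = {row[0]: row[1:] for row in reference.execute(query)}
    new = {row[0]: row[1:] for row in clone.execute(query)}
    script = []
    for k in sorted(new.keys() - old.keys()):  # rows added in the clone
        values = ", ".join(sql_literal(v) for v in (k, *new[k]))
        script.append(f"INSERT INTO {table} ({cols}) VALUES ({values});")
    for k in sorted(new.keys() & old.keys()):  # rows present in both
        if new[k] != old[k]:
            sets = ", ".join(f"{c} = {sql_literal(v)}" for c, v in zip(columns, new[k]))
            script.append(f"UPDATE {table} SET {sets} WHERE {key} = {sql_literal(k)};")
    for k in sorted(old.keys() - new.keys()):  # rows removed in the clone
        script.append(f"DELETE FROM {table} WHERE {key} = {sql_literal(k)};")
    return script


if __name__ == "__main__":
    # Tiny demo with a simplified, hypothetical Tabs table.
    reference, clone = sqlite3.connect(":memory:"), sqlite3.connect(":memory:")
    for db in (reference, clone):
        db.execute("CREATE TABLE Tabs (TabID INTEGER PRIMARY KEY, TabName TEXT)")
    reference.execute("INSERT INTO Tabs VALUES (1, 'Home')")
    clone.executemany("INSERT INTO Tabs VALUES (?, ?)", [(1, "Home"), (2, "Careers")])
    print("\n".join(data_diff_script(reference, clone, "Tabs", "TabID", ["TabName"])))
```

Applying the generated script to the reference database brings it in line with the clone, which is also the last step of the cycle described next.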

Once you have generated the script, you can either deploy it manually as part of the overall deployment process, or add it as a Post-Deployment script in your SSDT database project. Be aware that the latter can be problematic. By default, a dacpac will take any version of your database to the new version, whereas these data scripts only work between two specific versions.
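One common way to reduce that risk is a journal table. Each data script records its name when it runs and is skipped if that name is already present, so re-running a deployment against an already-upgraded database does nothing. This Python sketch shows the pattern with SQLite. The MigrationJournal table and the function name are hypothetical. In an SSDT Post-Deployment script, the equivalent would be an IF NOT EXISTS check against such a table. The journal prevents double application, but it does not by itself guarantee that the database is at the version the script expects.

```python
import sqlite3

# Hypothetical journal table recording which data scripts have already run.
JOURNAL_DDL = """CREATE TABLE IF NOT EXISTS MigrationJournal (
    ScriptName TEXT PRIMARY KEY,
    AppliedAt  TEXT NOT NULL DEFAULT CURRENT_TIMESTAMP)"""


def apply_once(conn, script_name, script_sql):
    """Run script_sql unless script_name is already in the journal.
    The script and its journal row are committed together, or not at all.
    Returns True if the script ran, False if it was skipped."""
    conn.execute(JOURNAL_DDL)
    conn.commit()
    seen = conn.execute(
        "SELECT 1 FROM MigrationJournal WHERE ScriptName = ?", (script_name,)
    ).fetchone()
    if seen:
        return False
    name = script_name.replace("'", "''")
    body = script_sql.strip().rstrip(";")
    try:
        conn.executescript(
            f"BEGIN;\n{body};\n"
            f"INSERT INTO MigrationJournal (ScriptName) VALUES ('{name}');\n"
            "COMMIT;"
        )
    except sqlite3.Error:
        conn.rollback()  # undo any statements that ran before the failure
        raise
    return True
```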

The final step in the cycle would be to apply the script to your reference database and re-clone it, starting the cycle again.

Would I do it Again?

So, given my disclaimer and that crazy sequence of steps, you are probably wondering if there is an easier way. I think that there may be. At this point, all I know is that the process I laid out here does work. We have deployed to production multiple times now.

However, I do suspect that leaving out the conversion to a web application would have saved a lot of time and headaches. I honestly do not see why simply bundling the entire site in a NuGet package would not work. In that scenario, I would leave the modules under the DNN site's tree structure and zip the entire thing up in one package. As laid out by Paul, one could simply publish the site and call NuGet.exe on it. You would have to create a nuspec file, and it remains to be seen how onerous that would be (sometimes you can get away with just the metadata section).
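For reference, a metadata-only nuspec is short. NuGet.exe requires id, version, authors and description, so a Python sketch that generates one might look like this. The values are placeholders, and 2010/07 is one of the schema namespaces NuGet recognises.

```python
import sys
from xml.sax.saxutils import escape

# One of the schema namespaces NuGet.exe recognises for .nuspec files.
NUSPEC_NS = "http://schemas.microsoft.com/packaging/2010/07/nuspec.xsd"


def minimal_nuspec(package_id, version, authors, description):
    """Text of a nuspec with only a <metadata> section holding the four
    elements NuGet.exe requires; values are XML-escaped."""
    fields = {"id": package_id, "version": version,
              "authors": authors, "description": description}
    body = "\n".join(f"    <{tag}>{escape(value)}</{tag}>" for tag, value in fields.items())
    return (
        '<?xml version="1.0" encoding="utf-8"?>\n'
        f'<package xmlns="{NUSPEC_NS}">\n'
        "  <metadata>\n"
        f"{body}\n"
        "  </metadata>\n"
        "</package>\n"
    )


if __name__ == "__main__" and len(sys.argv) == 5:
    # Usage: python make_nuspec.py DNN.Site 1.0.42 "Web Team" "DNN site"
    print(minimal_nuspec(*sys.argv[1:]), end="")
```

You would then run something like nuget.exe pack DNN.Site.nuspec -BasePath <published site folder> -Version <version>. As far as I know, when the nuspec has no <files> section, NuGet.exe packages the files found under the base path, which is what would make the metadata-only approach workable.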

Hooking this process into the build would be a bit more complicated. First, you would have to build the modules into the site's bin directory. Then you would have to publish the website to a local folder. Finally, you would have to package the results with NuGet.exe. Since we were using TFS, and TFS's Build Process Templates are quite possibly the worst build system ever devised, we chose the web application conversion route. Looking back, I am still undecided as to which would have taken more time: the conversion or the custom build process template.
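The first of those steps could be as simple as copying each module's compiled assemblies into the site's bin folder before publishing. The following is a minimal Python sketch. It assumes each module builds its DLLs into its own output folder, and the folder names in the test are hypothetical. Publishing and packaging are not shown.

```python
import shutil
from pathlib import Path


def stage_module_binaries(module_output_dirs, site_bin_dir, pattern="*.dll"):
    """Copy each module's compiled assemblies into the DNN site's bin folder.
    Returns the copied file names in the order they were copied."""
    site_bin = Path(site_bin_dir)
    site_bin.mkdir(parents=True, exist_ok=True)
    copied = []
    for out_dir in module_output_dirs:
        for dll in sorted(Path(out_dir).glob(pattern)):
            shutil.copy2(dll, site_bin / dll.name)  # overwrites older copies
            copied.append(dll.name)
    return copied
```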